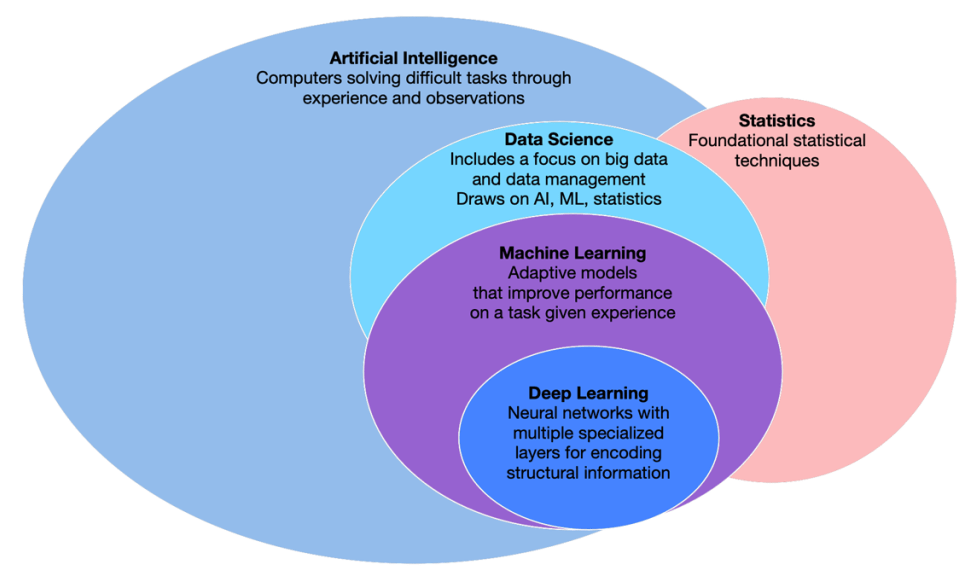

Statistics, big data, machine learning (ML), artificial intelligence (AI), deep learning (DL), natural language processing (NLP), and natural language understanding (NLU) are crucial to business and society today. These technologies work together to enable organizations to extract valuable insights from massive datasets, optimize operations, and drive automation. They also enhance customer service and enable sophisticated image and video analysis, such as object detection, facial recognition, and image classification. Additionally, they power speech recognition systems in virtual assistants like Siri and Alexa, and improve NLP tasks like sentiment analysis, language translation, and text generation. Together, these innovations help businesses make informed, data-driven decisions and create positive impacts across industries like healthcare, education, and research. However, they also raise ethical and privacy concerns, emphasizing the need for responsible development and regulation to ensure societal benefits while minimizing risks (Hanna et al., 2025). I found that the accompanying diagram nicely captures, at a high level, what these technologies cover (McGovern & Allen, 2021).

This week, as part of our Business Perspectives course, we began exploring statistics, covering key topics such as basic statistics, covariance and correlation, probability, regression, distributions, sampling, the Central Limit Theorem, and inference. These tools help us summarize data, identify patterns, and make evidence-based decisions. We also discussed the rise of Big Data and the importance of data visualization in making complex datasets more accessible and actionable, ultimately enhancing decision-making.
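To make one of these topics concrete, here is a minimal sketch of the Central Limit Theorem using only Python's standard library. The population, the sample size of 50, and the helper name `sample_means` are illustrative assumptions, not material from the course. The idea is that even when the underlying data are strongly skewed, the means of repeated random samples cluster around the population mean, with a spread close to the population standard deviation divided by the square root of the sample size.

```python
import random
import statistics


def sample_means(population, sample_size, n_samples, seed=0):
    """Draw repeated random samples (with replacement) and return each sample's mean."""
    rng = random.Random(seed)
    return [
        statistics.fmean(rng.choices(population, k=sample_size))
        for _ in range(n_samples)
    ]


# Hypothetical, deliberately skewed population (exponentially distributed values).
pop_rng = random.Random(42)
population = [pop_rng.expovariate(1.0) for _ in range(10_000)]

sample_size = 50
means = sample_means(population, sample_size=sample_size, n_samples=2_000)

pop_mean = statistics.fmean(population)
pop_sd = statistics.pstdev(population)
predicted_se = pop_sd / sample_size ** 0.5  # spread the CLT predicts for sample means

print(f"population mean:      {pop_mean:.3f}")
print(f"mean of sample means: {statistics.fmean(means):.3f}")
print(f"sd of sample means:   {statistics.stdev(means):.3f}")
print(f"predicted sd:         {predicted_se:.3f}")
```

Running it should show the last two numbers landing close together, which is what makes inference from a single sample possible in practice.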

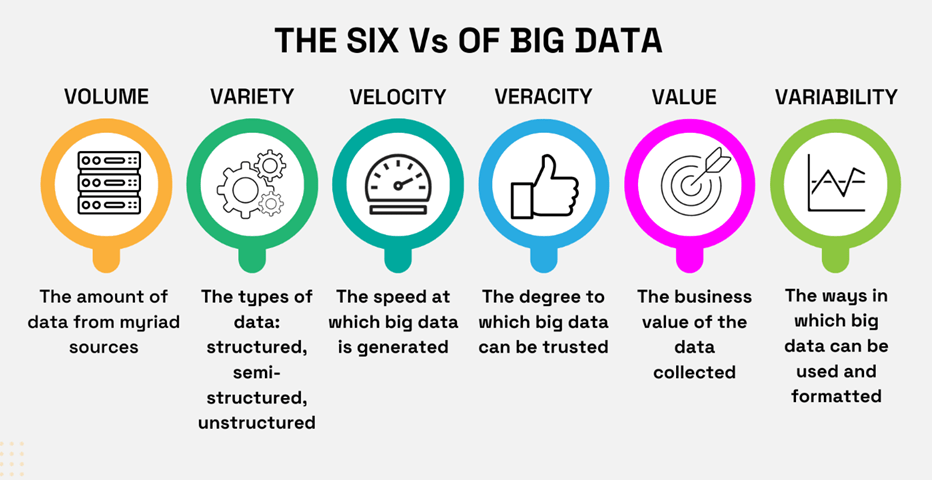

The “Vs” of big data have expanded from the original 3Vs (Volume, Velocity, and Variety) to include additional dimensions that better capture the growing complexity of data. Initially, the 3Vs focused on the massive amounts of data (Volume), the speed at which it is generated and processed (Velocity), and the diverse types of data (Variety). As big data grew in both scale and importance, a fourth “V,” Veracity, was added to address the reliability and accuracy of data. The concept then expanded further with Value, emphasizing the need to extract meaningful insights from raw data. More recently, Variability has been included to account for the changing nature of data, along with Visualization, which stresses the importance of effective representation for decision-making. Each additional “V” highlights a distinct challenge in managing and analyzing big data, reflecting its increasing role in shaping modern business and technology. The number of Vs seems to keep increasing, but I think the accompanying diagram captures the bulk of what’s important (Gergely, 2023).

These technologies have evolved rapidly since the 1980s. Each discipline has its origins in research and development spanning multiple decades, influenced by advancements in technology, computing power, and theoretical insights. Statistics has long been foundational in data analysis, but with the advent of big data and machine learning in the 1990s and 2000s, the landscape transformed. Machine learning methods, empowered by vast datasets and increasing computational power, have become integral to AI systems, driving advancements in deep learning, NLP, and NLU. These technologies, particularly in recent years, are reshaping industries from healthcare to finance, while also raising important questions about ethics and society. The following timeline traces this evolution from the 1980s to the present day.

- 1980s: Foundations in Statistics and AI
  - The 1980s were foundational for statistical methods and early AI models. Statistical learning theory emerged as a formal framework for analyzing learning algorithms, and statistical methods began to inform some business and research decision-making.
  - Early work on neural networks, though rudimentary, laid the groundwork for later developments in deep learning.
  - ML grew as a subfield of AI, focusing on pattern recognition and predictive models.

- 1990s: Emergence of ML and Big Data
  - In the 1990s, the rise of machine learning as a subfield of AI marked a turning point. Statistical learning models such as support vector machines (SVMs) and decision trees gained traction.
  - Big data emerged as organizations began handling large datasets, and early NLP models were used to improve text analysis.
  - New data storage and analysis technologies, including data warehouses, were developed.
  - Computer vision was applied to image processing, setting the stage for automated medical imaging.

- 2000s: The Rise of AI and Big Data
  - The 2000s saw significant advancements in computational power, leading to more efficient machine learning algorithms and the advent of “big data” tools, including Hadoop and MapReduce.
  - Building on milestones such as IBM’s Deep Blue defeating Garry Kasparov at chess in 1997, AI systems began to outperform humans in specific, narrowly defined tasks such as playing games.
  - Research on DL and neural networks gained momentum, enhancing AI capabilities.
  - Medical image processing was introduced for detecting anomalies in X-rays and MRIs.

- 2010s: Deep Learning and NLP Breakthroughs
  - Deep learning, particularly the use of neural networks with many layers (deep neural networks), revolutionized the AI landscape. It led to breakthroughs in medical image processing, enabling AI-driven diagnostics such as detecting tumors, fractures, skin cancer, and retinal diseases (Esteva et al., 2017). It also produced state-of-the-art results in speech recognition, visual object recognition, object detection, and many other domains such as drug discovery, genomics, and NLP (LeCun, Bengio, & Hinton, 2015).
  - NLP advanced rapidly with the adoption of word embeddings (e.g., Word2Vec) and recurrent neural networks (RNNs), which significantly improved language modeling and understanding.
  - Transformer-based models (e.g., BERT, GPT) enhanced NLP and NLU.

- 2020s: The Era of Transformative AI and NLP
  - Large models in the GPT (Generative Pre-trained Transformer) family mark the next phase of AI and NLP, producing human-like text and showing strong performance on comprehension tasks.
  - NLU and NLP technologies have become more sophisticated through advances in transformer-based architectures, making applications like real-time language translation and conversational AI commonplace.
  - Deep learning continues to revolutionize NLP, chatbots, and virtual assistants.
  - A growing number of AI-driven medical imaging tools have received FDA clearance or approval for automated detection of certain diseases (e.g., AI-assisted cancer diagnosis).
  - Ethical considerations around AI, bias, and responsible use of big data have become key topics.

As we leverage advancements in these technologies, it is crucial to remain aware of potential biases and misinterpretations that can lead to flawed conclusions. Common pitfalls include (Hanna et al., 2025):

- Sampling Bias – Using a non-representative dataset can distort results.

- Misleading Correlations – Just because two variables correlate does not mean one causes the other.

- Ignoring Context – Seasonal or temporary trends can be mistaken for long-term patterns.
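The "Misleading Correlations" pitfall can be illustrated with a small simulation. This is a minimal sketch on made-up data: the variables (`temperature`, `ice_cream`, `sunburn`), their coefficients, and the `pearson` helper are all hypothetical and chosen only for illustration. Temperature drives both ice cream sales and sunburn cases, so the two correlate strongly even though neither causes the other. Once temperature is held roughly constant, the correlation largely disappears.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


rng = random.Random(7)

# Hypothetical daily data: temperature is the hidden common cause (confounder).
temperature = [rng.uniform(10, 35) for _ in range(5_000)]
ice_cream = [2.0 * t + rng.gauss(0, 5) for t in temperature]  # sales depend on temperature
sunburn = [0.5 * t + rng.gauss(0, 2) for t in temperature]    # cases depend on temperature

# Across all days, the two outcomes look strongly related.
overall = pearson(ice_cream, sunburn)

# Hold the confounder roughly fixed: keep only days between 24 and 25 degrees.
band = [(i, s) for t, i, s in zip(temperature, ice_cream, sunburn) if 24 <= t < 25]
within = pearson([i for i, _ in band], [s for _, s in band])

print(f"correlation across all days:        {overall:.2f}")
print(f"correlation at similar temperature: {within:.2f}")
```

Comparing the two printed values shows why a correlation should prompt the question "what else could be driving both variables?" before any causal claim is made.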

By integrating statistical knowledge with critical thinking, we can analyze data responsibly and avoid misleading conclusions. Whether in business, healthcare, or finance, mastering data-driven decision-making is essential for success.

Are you ready to unlock the full potential of data? The insights are there—you just need to interpret them wisely!

References:

Esteva, A., Kuprel, B., Novoa, R. A., Ko, J., Swetter, S. M., Blau, H. M., & Thrun, S. (2017). Dermatologist-level classification of skin cancer with deep neural networks. Nature, 542(7639), 115-118. https://pubmed.ncbi.nlm.nih.gov/28117445/

Gergely, S. (2023, December 9). Big data in procurement: A quick guide. Veridion. https://veridion.com/blog-posts/big-data-procurement/

Hanna, M. G., Pantanowitz, L., Jackson, B., Palmer, O., Visweswaran, S., Pantanowitz, J., Deebajah, M., & Rashidi, H. H. (2025). Ethical and bias considerations in artificial intelligence/machine learning. Modern Pathology, 38(3), 100686. https://doi.org/10.1016/j.modpat.2024.100686

LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature, 521(7553), 436-444. https://www.nature.com/articles/nature14539

McGovern, A., & Allen, J. (2021, October 6). Training the next generation of physical data scientists. Eos. https://eos.org/opinions/training-the-next-generation-of-physical-data-scientists
